Now that you have established what type of penalty has hit your site, it's time to look into what caused it. Once you get to the root of the problem, it can be fixed, no matter what it is.

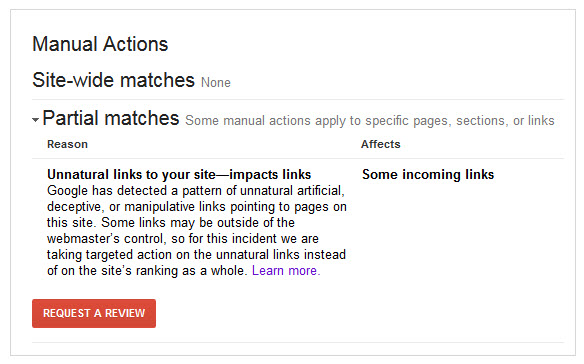

If you've had a manual penalty, you may receive a message in Webmaster Tools, or even an email, giving suggestions as to what you could do to improve your chances. In Webmaster Tools, go to "Search Traffic > Manual Actions". If your site has a shady link profile, you might see something like this:

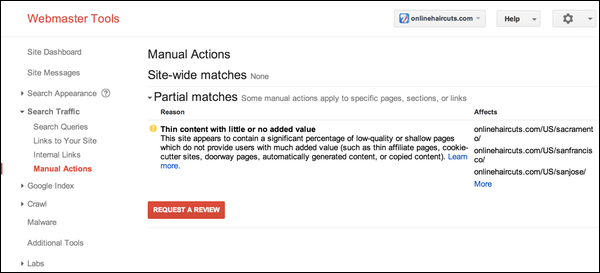

If, however, the offence is down to the content on your site, you might see something like this:

If you've sustained an algorithmic penalty, and haven't received any notification from Google, then unfortunately you won't receive any direct help from Google in figuring out what the problem is.

Regardless of what state your site is currently in, there is almost always room for improvement. In this chapter we'll discuss a comprehensive list of potential penalty-triggering offences.

Note: We won't go through every known factor that affects your site's performance. Many factors will only affect you positively, such as well-structured page mark-up, domain age and authority, or site speed. While you might be missing out on potential rankings by neglecting positive SEO factors, failing to include them is never going to get your site penalised. For now, we'll focus on the most significant negative SEO factors that you may have used, and leave positive SEO for Chapter 7.

We typically see offences that fall into three categories: on-site content, off-site backlinks, and technical issues with the code.

Once you have a good understanding of the information in this chapter, you'll be able to use the next chapters on reversing the penalty much more effectively.

Algorithms struggle to differentiate between good content and bad content. Google has been refining its own for years, but it will still be a very long time before we develop an algorithm that can actually understand text the way we do.

Instead of judging the general quality of the text, a search engine's algorithm picks up on tell-tale signs nestled within page content: patterns that are consistent with spammy content (such as frequent typos, excessive advertising or blatant plagiarism), or the absence of certain patterns that good content tends to have.

Most of the time, if these signs build up to a certain threshold, a site with poor content may be marked for a manual review, at which point an employee will easily see if the site is legitimate and merely unlucky, or if it's trying to "game the system" with its text.

Sometimes these tell-tale signs are so numerous that a serious penalty is triggered automatically. Keyword stuffing, large-scale duplicate content and doorway pages are among the many things that can easily be detected algorithmically.

With that in mind, let's get clear on which of these tell-tale signs are the most significant.

One of the easiest ways for an algorithm to identify low-quality or scraped content is to check it against other content on the web and see whether the text on your page was copied from, or duplicated, elsewhere.

You can check whether your content has been copied elsewhere by entering your URL into Copyscape.

Use CopyGator if you want a second opinion.

Content spinners are black-hat SEO tools that "spin" an article into many versions, swapping in synonyms to rewrite each sentence in a variety of different ways.

The problem is that, to human readers, spun articles usually read horribly. Often they don't even make sense. Check out this vaguely hilarious copy from a sales page posted in Google's own forums.

Not particularly convincing, and generally easy to spot, even for an algorithm.

Spun articles have a chance of slipping under Google's radar, but they are always a significant risk. Software can quantify just how "unique" an article is, and of course a spun article is never 100% unique. As a general rule, an article that is less than 80% unique when compared with a similar article on the web will easily be flagged by the search engines.
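Google doesn't publish how it measures uniqueness, so the sketch below swaps in a common, simpler technique called w-shingling: break each text into overlapping three-word sequences ("shingles") and report what percentage of your article's shingles don't appear in the other text. The shingle size of 3 and the function names are illustrative assumptions, and the score is only a rough self-check against the 80% rule of thumb above, not a reproduction of any search engine's check.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word sequences ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def uniqueness(article: str, other: str, k: int = 3) -> float:
    """Percentage of the article's shingles that do not appear in the other text."""
    mine = shingles(article, k)
    if not mine:
        return 100.0  # too short to compare; treat as unique
    shared = mine & shingles(other, k)
    return 100.0 * (1 - len(shared) / len(mine))
```

Swapping a couple of words, as a spinner does, leaves most shingles intact: comparing "The quick brown fox jumps over the lazy dog near the river bank." with a version where "quick" and "jumps" become "fast" and "leaps" scores only about 45% unique.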

If you've used spun content before, take it down and have it rewritten (by a human, this time).

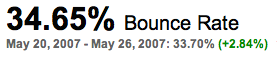

One flag for potential thin content is a high rate of bounces, or returns to the search engine results page (SERP), combined with very little time spent on site. Essentially, if you're getting a lot of visitors who take one look at your site and flee back to Google, it may count as a mark against you: it is often theorised that Google monitors return-to-SERP data as a way of pinpointing bad results.

You can see bounce rate and average time on site in Google Analytics. However, take the figures you see there with a grain of salt: Analytics is well known for being a little inaccurate with regard to these two figures.

A preferable "metric" for this factor is your intuition.

You know your market. You've talked to them and you know their needs. When you read your site, do you think they'll find it useful? Are you adding anything or just pulling and collating information from elsewhere?

Whatever your answer, what could you do to make their time on your site even more useful?

In the early days of shady SEO, it was common to stuff pages with whichever keyword(s) you wanted to rank for. Search results filled up with whoever had the most mentions of the word you typed in: whoever had "credit card" written the most times on their site was often ranked for the keyword "credit card". Search engines quickly improved, and today keyword stuffing is an easily spotted offence.

It's a game of balance. You want to be relevant to a search, but not look like you're "trying" too hard. You can easily go too far by putting the keyword in your domain name and URL, sprinkling it liberally through your content, and adding it to your page titles, a few subheadings and the file names of a few images.
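There is no published keyword-density limit, but counting how often a phrase appears per 100 words is a quick way to spot copy that leans on it too heavily. A minimal sketch, assuming plain text with the HTML already stripped; the function name is hypothetical, and any cut-off you apply is your own judgement call rather than a Google rule:

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Occurrences of phrase per 100 words of text (case-insensitive, whole words only)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window the length of the phrase across the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits / len(words)
```

For example, "Credit card deals: compare every credit card and find the best credit card today" uses the phrase 3 times in 14 words, about 21 per 100 words, which reads as stuffed to a human too. Running the same check on your synonyms shows whether the wording is actually varied.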

To protect against over-optimisation, use synonyms and related keywords.

Include the target keyword a few times (you'll almost certainly find that you do this naturally anyway), and use synonyms or slight variations whenever possible, particularly in the text content, headings and header tags. Not only will this improve your keyword targeting, but it will also make your pages relevant for a much wider range of phrases and long-tail keywords.

Hidden text is another relic from a bygone era: this penalty factor was introduced when search engines caught wind of people smuggling a ton of invisible keyword repetitions into a page.

They were camouflaged by matching the text colour to the background colour, or by using hidden divs.

Clever, eh?

Not any more. Keep an eye out for this happening accidentally; it has been known to. If at any point the colour of your text is too similar to the colour behind it, you may be in danger.
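How similar is "too similar" isn't defined here. One objective yardstick you could borrow is the WCAG 2 contrast ratio, which runs from 1:1 (identical colours) to 21:1 (black on white). The sketch below implements that formula for #rrggbb colours only; treating anything below the WCAG AA minimum of 4.5:1 for body text as worth a second look is our assumption, not a Google threshold.

```python
def _linearise(channel: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (WCAG 2 relative-luminance formula)."""
    s = channel / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance of a #rrggbb colour, from 0 (black) to 1 (white)."""
    h = hex_colour.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearise(r) + 0.7152 * _linearise(g) + 0.0722 * _linearise(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio: 1.0 for identical colours up to 21.0 for black on white."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)
```

As an illustration, contrast_ratio("#fafafa", "#ffffff") comes out at roughly 1.04: text that is effectively invisible on a white page.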

One of the primary goals of the Panda Update was to take down the content farms that had started to populate the web, pushing better-quality sites further down the search results.

This covers any user-generated or auto-generated content that is not useful, or that exists purely to capture search traffic and serve visitors advertising.

This is why, if you ever feel compelled to post on one of the surviving content farms like HubPages or Squidoo, you'll be continually reminded to post only the very best content you're capable of, or face having it removed without warning.

If you have a forum on your site, you might be in danger of triggering this factor. To keep safe, simply be sure to foster a good culture within the forum community, and moderate it carefully to weed out spam.

Ranking sites based on how many links pointed to them was Google's big differentiator, and the launch-pad for its market domination.

It's an elegant system. If another site, particularly one of high standing, references your content as useful by linking to it from their own site, Google takes that as a vote of confidence, trust and relevance for your site.

The flaw is that the system quickly starts to break down when people game hyperlinks: linking to sites for money, or linking back to their own sites from externally posted articles to make them look more popular than they are.

Gaming links in this way can sound tempting, but if you're suffering from a penalty now and suspect it has something to do with how you've built links in the past, study this list and see if anything stands out to you.

We'll dive into the proper ratios of anchor text in Chapter 4, but for now remember that only a small minority of your anchor texts should be an exact match for the keyword you're trying to rank for.

In a natural link profile, most of the links pointing to your site use the site's brand name, the name of the owner, the domain name or just a "naked" URL. This is how most people link to sites when left to their own devices, and so this is what looks natural to search engines.
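If you've exported the anchor texts of your backlinks from a link-analysis tool, a rough tally like the one below shows how heavily you lean on exact-match anchors. The bucket names and the simple substring rules are illustrative assumptions (real anchors still need a manual look), and the sketch deliberately applies no target ratio; those are covered in Chapter 4.

```python
from collections import Counter
from urllib.parse import urlparse


def classify_anchor(anchor: str, keyword: str, brand: str, domain: str) -> str:
    """Put one anchor text into a rough bucket (illustrative rules only)."""
    text = anchor.strip().lower()
    if text == keyword.lower():
        return "exact-match"
    # Check for URLs before brand names, because a domain usually contains the brand.
    if domain.lower() in text or urlparse(text).netloc:
        return "domain/URL"
    if brand.lower() in text:
        return "brand"
    return "other"


def anchor_profile(anchors: list[str], keyword: str, brand: str, domain: str) -> dict[str, float]:
    """Percentage of anchors falling into each bucket."""
    if not anchors:
        return {}
    counts = Counter(classify_anchor(a, keyword, brand, domain) for a in anchors)
    return {label: 100.0 * n / len(anchors) for label, n in counts.items()}
```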

While swapping a link with a relevant site that your users will find useful is a good thing and should be encouraged, swapping links in excess is an indication of foul play.

For example, the owner of a web-savvy taxi company would legitimately want to swap links with all the hotels in the local area, to help his visitors find a place to stay when they arrive, and to help the hotels' visitors find out about him if they need a cab.

If he swapped hundreds of links with websites about poker, embroidery, politics and many other random niches, it would be a different story. These links would provide no added benefit to his customers, and would risk triggering a penalty.
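To find candidate link swaps, you can intersect the domains you link out to with the domains that link to you. This minimal sketch assumes you already have both lists of URLs (for example, outbound links from a crawl of your own site and inbound links exported from a backlink tool); the function names are hypothetical. It only surfaces reciprocal pairs: judging whether each one is as relevant as the taxi-and-hotel example is still up to you.

```python
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Lower-cased host name of a URL, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def reciprocal_domains(outbound: list[str], inbound: list[str]) -> set[str]:
    """Domains you link to that also link back to you."""
    return {domain_of(u) for u in outbound} & {domain_of(u) for u in inbound}
```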

Quality pages can be sifted from poor ones by how many other sites link to them. Any sign of manipulating this voting system is seen as a serious violation, and is sure to get you flagged.

Often, paid links are thrown up in a haphazard way, violating one or more of the other factors mentioned here, so if you have ever paid for links in the past, you'd better look into how they were done. Get in contact with the link provider, and ask for a copy of their records for your order(s).

2012 saw a big upheaval in the world of SEO. Many of the internet's biggest link networks were targeted and obliterated from Google's index overnight, which also penalised the hundreds of thousands of sites that had paid these link networks to boost their rankings.

Link networks still exist, but they are (generally) much more underground. They're kept as secret as possible, because they are targeted so heavily by the search engines. Being linked to by one of these networks leaves you open to being caught in future crossfire. It's up to the network owners to avoid being spotted by the ever-watchful eyes of the search engines, and if they're caught, you'll pay the price. And no matter how clever a network owner is, Google will usually catch up with them at some point. Networks just aren't worth the risk.

You don't have to be on the receiving end of a paid link to be penalised.

Just as the link networks were de-indexed for linking to people for money, so can your site be. Having people pay for ad links from a particular page can seem like a valid way to sell advertising space on your website, but Google sees this as a clear violation of its guidelines.

If you're going to sell ad space on your site, do so legitimately, through a reputable ad network.

If yesterday you were brand new, and today ten thousand links are pointing to your home page, what does that suggest?

When you pay for a high volume of low quality links, it's common for the link builder to throw up a huge quantity all at once, using automated software.
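If your backlink tool can export the number of newly discovered links per day, a simple outlier test will highlight the kind of overnight jump described above. The sketch below swaps in a standard z-score check; the threshold of 3 standard deviations and the function name are assumptions, and it needs a few weeks of data before a single spike can clear that bar. A flagged day may just as easily be a legitimate viral hit, so treat it as a prompt to investigate rather than a verdict.

```python
from datetime import date
from statistics import mean, pstdev


def velocity_spikes(new_links_per_day: dict[date, int], threshold: float = 3.0) -> list[date]:
    """Days whose count of new links is more than `threshold` standard deviations above the mean."""
    counts = list(new_links_per_day.values())
    if len(counts) < 2:
        return []
    average, spread = mean(counts), pstdev(counts)
    if spread == 0:  # perfectly steady link growth, nothing stands out
        return []
    return sorted(
        day for day, n in new_links_per_day.items() if (n - average) / spread > threshold
    )
```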

Sometimes a sudden high velocity of links happens for legitimate reasons, namely when something of yours goes "viral" and suddenly the whole world seems to be linking to it and sharing it.

As with link velocity, the quality of the sites linking to you is often poor when links are bought en masse.

The old link networks often consisted of blogs full of dire articles covering every niche under the sun.

Just as your personal reputation can be besmirched by associating with a known crook, linking your site to a site full of spam and terrible content is going to hurt your rankings too.

Usually this happens through swapping links with such sites, or even through their malicious owners hacking into your site and planting links in your content in an effort to boost their own rankings.

When you link to another site on every single page of your site, or if another site links to you in this way, it can be seen as likely manipulation.

There are legitimate reasons for this, such as blogroll links or credit links back to web designers or developers.

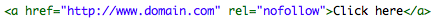

Sometimes you want a particular link on every page for the purpose of moving leads through your sales funnel. In this instance, you can add rel="nofollow" to the HTML of the links in question.
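Once you've added the attribute, it's worth confirming that the site-wide links you meant to nofollow really carry it. This sketch uses Python's standard html.parser module to list the links on a page that are still followed; it treats rel as a space-separated list, so rel="noopener nofollow" counts as nofollowed. Run it over the HTML of each page; the class and function names are our own.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Collect (href, is_nofollow) for every <a href> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, bool]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href:
            # rel can hold several space-separated tokens, e.g. "noopener nofollow".
            rel_tokens = (attributes.get("rel") or "").lower().split()
            self.links.append((href, "nofollow" in rel_tokens))


def followed_links(html: str) -> list[str]:
    """Return the hrefs on a page that do NOT carry rel="nofollow"."""
    parser = LinkAudit()
    parser.feed(html)
    return [href for href, nofollow in parser.links if not nofollow]
```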

Any link that is hidden from a user viewing your site is treated as suspicious.

This offence can be committed by matching a link's colour to the background it sits on, or by hiding a link in the script files. These are exactly the sorts of things that search engines have become excellent at spotting.

While linking to affiliate products is a perfectly legitimate business model in the world of online marketing, cramming a page full of affiliate links sets off alarm bells and could incur a penalty, especially on thin-content sites.
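To see how many affiliate links a page actually carries, you can count them straight from its HTML. In this sketch, an Amazon host plus a tag query parameter (the format Amazon Associates links typically use) is assumed to mark an affiliate link; shortened links such as amzn.to won't be caught, and other programmes need their own rule. No number of affiliate links is officially "safe", so the count is for your own comparison between pages.

```python
from html.parser import HTMLParser
from urllib.parse import parse_qs, urlparse


class AffiliateCounter(HTMLParser):
    """Tally all <a href> links and those that look like Amazon affiliate links."""

    def __init__(self) -> None:
        super().__init__()
        self.total = 0
        self.affiliate = 0

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag != "a" or not href:
            return
        self.total += 1
        parsed = urlparse(href)
        # Assumption: an Amazon host plus a "tag" query parameter marks an affiliate link.
        if "amazon." in parsed.netloc.lower() and "tag" in parse_qs(parsed.query):
            self.affiliate += 1


def affiliate_counts(html: str) -> tuple[int, int]:
    """Return (affiliate links, total links) for one page of HTML."""
    counter = AffiliateCounter()
    counter.feed(html)
    return counter.affiliate, counter.total
```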

Whether pointing to an internal page or to a page on another site, if following a link brings up a 404 error page, it definitely won't be helping your user experience.

The internet is an ever-changing beast, and broken links will happen. There are plenty of tools available that can sweep your site for broken links when you’d like to quickly check, such as Xenu's Link Sleuth, and BrokenLinkCheck.com.
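For a quick check of a single page without a dedicated tool, Python's standard library is enough. This is a minimal sketch, not a replacement for the crawlers named above: it resolves every link on one page, sends a HEAD request to each, and reports anything that returns an error status (400 or above) or can't be reached at all. Some servers reject HEAD requests with 405, so double-check any such result in a browser. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen

SKIP_PREFIXES = ("#", "mailto:", "tel:", "javascript:")


class LinkCollector(HTMLParser):
    """Collect absolute URLs for every <a href> on a page, skipping non-web links."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href and not href.startswith(SKIP_PREFIXES):
            self.urls.append(urljoin(self.base_url, href))  # resolve relative links


def status_of(url: str, timeout: float = 10.0) -> Optional[int]:
    """HTTP status code from a HEAD request, or None if the server can't be reached."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as err:  # 4xx/5xx responses arrive as exceptions
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts (URLError is an OSError)
        return None


def broken_links(page_url: str, html: str) -> list[tuple[str, Optional[int]]]:
    """Return (url, status) for links on the page that error out or can't be reached."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    unique_urls = dict.fromkeys(collector.urls)  # de-duplicate, keeping page order
    results = [(url, status_of(url)) for url in unique_urls]
    return [(url, status) for url, status in results if status is None or status >= 400]
```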

Search engine "bots" read the code of your site and "crawl" through it, analysing the content and the links within.

Technical site issues are rarely talked about, but your code is the foundation of your online presence. Be sure it's running smoothly, and you’ll stand a much better chance of lasting over time.

An XML sitemap is not a requirement, but there's no reason not to create one: it helps tell Google when you've posted new content, which can prompt that content to be crawled more quickly than it otherwise would be.
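The sitemap format is defined by the sitemaps.org protocol: a urlset element containing one url entry per page, each with a loc and, optionally, a lastmod date. A minimal sketch that builds one with Python's xml.etree.ElementTree, assuming you already have a list of absolute URLs and their last-modified dates (most CMSs and SEO plugins can also generate this file for you):

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified date as YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # characters such as & are escaped automatically
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the output as sitemap.xml in your site root and submit it in Webmaster Tools; you can also point to it from robots.txt with a Sitemap: line.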

Servers may crash from time to time, but if the problem is not handled quickly, or if it happens regularly, the search engines notice and take it as a sign of neglect. They can't keep searchers happy by sending them to an error page, so if your site does crash, make getting it back up and running your number one priority.

In 2010 Google publicly announced that they look at the speed of a site when determining where to rank it.

Over time a site can be bogged down by extra code, plugin after plugin, and large files such as images and videos. To maintain fast loading times, keep your larger files on a separate cloud-based storage server. For large sites especially, slow site speed can severely affect your indexation.

We would recommend the incredibly affordable Amazon S3 cloud storage service, or Cloudflare, a content delivery network that is easy to configure.

Sins of the past can come back to haunt you if you've just picked up a new domain. Even if the domain had expired before you bought it, there may be a ton of spammy links pointing to it, which can create problems further down the line.

We'll look into how to vet the link profile of any site in the next chapter.

Anyone can report your website as spam if they so wish. The report may be genuine, or it may come from a competitor who doesn't mind playing dirty. It makes no difference.

It's nothing to worry about if your site is squeaky clean, since a report will only flag your site, not penalise it. If, however, you've been scraping by under the radar while quietly breaking the rules, being reported could mean you're flagged for a review.

Perhaps the ultimate violation in the online world, hacking becomes a greater risk the more well-known and successful your site becomes. Using a popular CMS such as WordPress without keeping your software and plugins up to date can also make you a target.

Get your site security in order and prepare for it as best you can. If you've already been hacked, and the hacker has left spammy links, or hidden text, you may not know about it right away.

If you're sure you've stuck to the rules in every way and your penalty could only be due to an SEO attack, the only thing to do is conduct a comprehensive on-site audit, as explained in Chapters 3 and 4.

Cloaking is a technique by which search engine bots are presented with different content from what appears in the user's browser window.

There are two types:

1. IP Delivery Cloaking

Delivering different content based on IP address is something the search engines do all the time to present users with geo-targeted content. However, it can also be used to tell a human user apart from a search bot.

2. User-Agent Cloaking

Both browsers and crawlers identify themselves with a user-agent string. This lets websites tailor their pages slightly better to different browsers when necessary, but it can of course also be used to present totally different content to browsers and crawlers.
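You can check your own pages for user-agent cloaking by requesting the same URL once as a browser and once as Googlebot, then comparing the two responses. A minimal sketch using only the standard library follows; the 0-to-1 similarity score comes from difflib.SequenceMatcher, and the browser user-agent string is just a typical example. It cannot detect IP delivery cloaking, because both requests come from your own IP address, and dynamic elements such as ads or timestamps will lower the score on perfectly clean pages, so treat a low score as a reason to compare the two versions by eye.

```python
from difflib import SequenceMatcher
from urllib.request import Request, urlopen

# Example user-agent strings; Googlebot's desktop crawler identifies itself like the second one.
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko)"
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch_as(url: str, user_agent: str, timeout: float = 10.0) -> str:
    """Download url while presenting the given User-Agent header."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(a: str, b: str) -> float:
    """Similarity of two responses, from 0.0 (nothing shared) to 1.0 (identical)."""
    return SequenceMatcher(None, a, b).ratio()


def cloaking_check(url: str) -> float:
    """Compare what a browser and Googlebot receive for the same URL."""
    return similarity(fetch_as(url, BROWSER_UA), fetch_as(url, GOOGLEBOT_UA))
```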

Cloaking is an old practice. If it is affecting your site, it was probably put in place a long time ago.

Spencer Haws of NichePursuits.com ran a case study for his readers on how to rank a brand-new website. He carried out keyword research, picked a good niche, and built his site. It started to climb in Google, and it looked like an unhindered stroll to the top until he posted an update on his blog.

In it, Spencer explained that his site had suddenly dropped from page 2 to page 24 for his main keyword.

Not only that, but the page which was ranking there was not his home page. Clearly, he had suffered a penalty.

He was perplexed, since he had used exactly the same techniques to rank this site as with any of his other niche sites.

The one difference was the number of Amazon affiliate links he had placed on one of his pages.

On that page he had built a very useful table comparing the prices and attributes of 50 products in his chosen niche. His visitors loved it, but Google was not so thrilled. In each row of the table he put two affiliate links, one in the text and one on the product image, making a total of 100 affiliate links on his home page!

After he moved the affiliate links off his home page onto a sub-page, and stopped Google from crawling that "money" page by excluding it in his robots.txt file, his rankings returned within 9 hours.
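Spencer's exact robots.txt isn't shown, so here is a hedged sketch of how you could confirm that a rule like his blocks the intended page before relying on it. It uses Python's urllib.robotparser module; the /top-picks/ path is a hypothetical stand-in for the "money" page.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if the given robots.txt rules stop user_agent from crawling url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical rule excluding the affiliate-heavy sub-page.
RULES = """User-agent: *
Disallow: /top-picks/
"""
```

With these rules, is_blocked(RULES, "https://example.com/top-picks/") should return True, while the home page stays crawlable.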

Note: That sort of speed is only possible with an algorithmic penalty.

Let's recap the biggest takeaways of this chapter.

You should now have a clear understanding, or at least a very good guess, of what caused your penalty in the first place. Next we can start the process of fixing it. In the next chapter we'll see how to quickly find and collect the data you need to know exactly what needs changing and in what way. It's time to measure what's actually happened and gain clarity on the situation, so that when we go in to fix the problem, we don't waste any time or money on the inconsequential.

Ready? Let's keep it moving...
